nginx as ingress for Docker compose

In June I wrote about how to use Docker & nginx to deliver statically rendered brotli files for your web (frontend) application. It improves delivery quite a bit, but left me wondering: isn't there too much static web server involved?

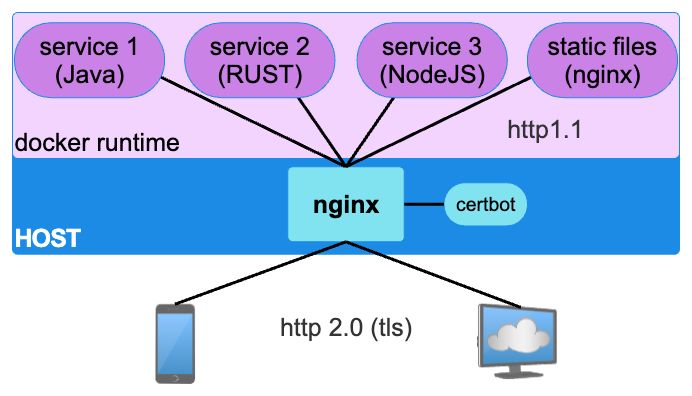

Double hop to deliver static files

A typical web application using micro/mini/midi services looks like this:

It is common, easy and concerns quite separated. However it comes with a set of challenges:

- nginx doesn't do HTTP/2 on `proxy_pass`, so you miss the ability to serve static files directly with HTTP/2

- For static files we have two nginx instances involved

- Each service needs to be exposed to the host at some port

- The service architecture leaks to the host-based nginx, so any change in a service needs an update to the `docker-compose.yml` AND the host-based nginx configuration

- The containers depend on that configuration, which is external to them

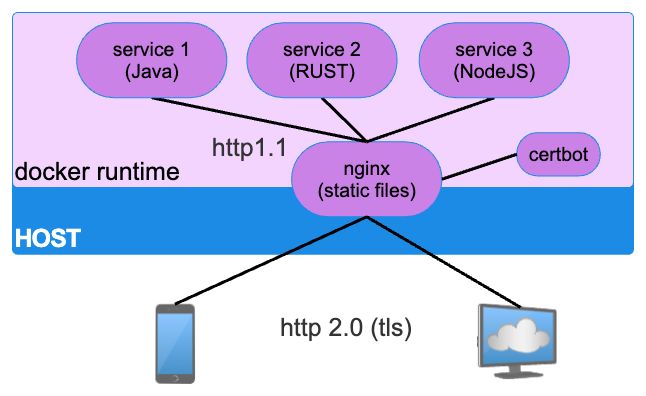

So I tried to design a better way to handle this without going all K-in:

This looed like a more promising approach:

- Services could be addressed with their internal network name

- Only Ports 80 and 443 of one container need exposure on the host

- The nginx configuration inside the container is immutable and can't accidentally be reconfigured in production (your image comes from a pipeline, doesn't it?)

Challenges

- When trying to configure certbot, I initially tried using the `--nginx` parameter with an HTTP challenge and shared data mounts. None of the attempts worked satisfactorily, so in the end I settled on a DNS-01 challenge using Cloudflare

- Since I wanted the nginx configuration to be inside the container image (and not on a data mount), a good understanding of nginx's configuration is necessary. The only persisted information was `/etc/letsencrypt` for the certificate and a secret for the Cloudflare credentials

- When the nginx configuration is statically configured for TLS, on initial load it will fail since the certs don't exist yet. Auntie Google suggested a manual run of certbot, but I favour `docker compose up` to handle everything

- I ended up creating my own Docker images, which was an epiphany: it absolutely makes sense to build a container image for a single use instead of trying hard to make it configurable and vulnerable to misconfiguration

Solution components

The Dockerfile for nginx has been shown before, so a look at the nginx configuration file suffices:

    server {
        server_name app.example.com beta.example.com;
        root /usr/share/nginx/html/app;
        index index.html;

        error_page 500 502 503 504 /50x.html;
        error_page 404 /404.html;

        location = /50x.html {
            root /usr/share/nginx/html/default;
        }

        location = /404.html {
            root /usr/share/nginx/html/default;
        }

        # Link to microservices (repeat as needed)
        location /api/service1 {
            proxy_http_version 1.1;
            proxy_cache_bypass $http_upgrade;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "upgrade";
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;
            proxy_set_header X-Forwarded-Host $host;
            proxy_set_header X-Forwarded-Port $server_port;
            proxy_set_header Host $host;
            proxy_pass http://service1:8080/api;
        }

        listen [::]:443 ssl http2;
        listen 443 ssl http2;
        ssl_certificate /etc/letsencrypt/live/app.example.com/fullchain.pem;
        ssl_certificate_key /etc/letsencrypt/live/app.example.com/privkey.pem;
        include /etc/nginx/security.conf;
    }

    server {
        server_name app.example.com beta.example.com;
        listen [::]:80;
        listen 80;
        return 301 https://app.example.com$request_uri;
    }
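
The path handling of the `location` / `proxy_pass` pair is easy to get wrong. As far as I understand nginx's behaviour, when `proxy_pass` carries a URI part (here `/api`), the part of the request path that matched the location prefix is replaced by it. The little shell function below only mimics that string replacement for illustration. `map_upstream_uri` is a made-up name, not an nginx tool, and the prefix and upstream values are copied from the configuration above.

```shell
#!/bin/sh
# Illustration only: mimics how nginx maps a request path to the upstream path
# for "location /api/service1" with "proxy_pass http://service1:8080/api;".
# map_upstream_uri is a hypothetical helper for this post, not part of nginx.
map_upstream_uri() {
  location_prefix="/api/service1"
  upstream_uri="/api"
  request_uri="$1"
  case "$request_uri" in
    "$location_prefix"*)
      # Replace the matched location prefix with the proxy_pass URI part
      printf '%s\n' "${upstream_uri}${request_uri#"$location_prefix"}"
      ;;
    *)
      # This location does not match, another location would handle it
      return 1
      ;;
  esac
}

map_upstream_uri "/api/service1/users"   # prints /api/users
```

Since this is a plain prefix match, a request to `/api/service10` would match too. Using a trailing slash on both the location and the `proxy_pass` URI is one way to avoid that, if it matters in your setup.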

With this configuration we need to get certbot to write to `/etc/letsencrypt`; nginx only needs to read it. To prevent the server from throwing errors when certbot hasn't done its work yet, we use a shell script as entry point:

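    #!/bin/sh
    # Wait until certbot has created the certificate directory
    while [ ! -d "/etc/letsencrypt/live/app.example.com" ]
    do
        sleep 10s
    done

    # Reload nginx every 6 hours in the background to pick up renewed
    # certificates, and run nginx itself in the foreground
    while true
    do
        sleep 6h & wait ${!};
        nginx -s reload;
    done & nginx -g "daemon off;"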
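
The wait loop above waits forever if certbot never succeeds (wrong token, DNS trouble). A possible variation, sketched here under my own assumptions, is a wait with a timeout so the container fails visibly instead of hanging. `wait_for_dir` and its default values are hypothetical and not part of the setup described in this post.

```shell
#!/bin/sh
# Hypothetical helper: wait until a directory exists or a timeout expires.
# Arguments: directory, timeout in seconds (default 600),
#            poll interval in seconds (default 10).
wait_for_dir() {
  dir="$1"
  timeout="${2:-600}"
  interval="${3:-10}"
  waited=0
  while [ ! -d "$dir" ]; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "Timed out after ${waited}s waiting for $dir" >&2
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  return 0
}

# Possible use in the nginx entry point script:
# wait_for_dir "/etc/letsencrypt/live/app.example.com" 600 10 || exit 1
```

With `restart: unless-stopped` the container would be started again after such an exit, so the failure shows up in the logs and the restart count rather than as a silently waiting container.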

The `run.sh` entry point script of our custom certbot image is structured in a similar fashion:

    #!/bin/sh
    # Obtains and renews the certbot certificates for example.com

    # Initial request using the Cloudflare DNS-01 challenge
    get_cert() {
        echo "Getting certs"
        certbot certonly \
            --non-interactive \
            --dns-cloudflare \
            --dns-cloudflare-credentials /run/secrets/cloudflare.ini \
            --expand \
            --dns-cloudflare-propagation-seconds 60 \
            --email me@example.com \
            --agree-tos \
            -d app.example.com \
            -d beta.example.com
        # add more -d lines above for more domains (mind the trailing backslash)
    }

    renew_cert() {
        certbot renew
    }

    # Exit cleanly when the container is stopped
    trap exit TERM

    # Renew when certificates exist, otherwise request them; repeat every 12 hours
    while true
    do
        if [ -d "/etc/letsencrypt/live" ]; then
            renew_cert
        else
            get_cert
        fi
        sleep 12h & wait ${!}
    done

Finally, the Dockerfile for certbot. You might want to modify the second line when you use a different DNS provider:

    FROM certbot/certbot
    RUN pip3 install certbot-dns-cloudflare
    COPY run.sh /bin/run.sh
    RUN chmod +x /bin/run.sh
    ENTRYPOINT [ "/bin/run.sh" ]

And last but not least, the `docker-compose.yml` that makes everything tick:

    version: '3.9'

    services:
      # nginx based container with all static content
      ingress:
        image: 'ghcr.io/example/ingress:latest'
        restart: unless-stopped
        ports:
          - 80:80
          - 443:443
        volumes:
          - /opt/example/letsencrypt:/etc/letsencrypt/:ro
        depends_on:
          - certbot
          - service1

      # Automatic renewal of certificates using Letsencrypt
      certbot:
        image: ghcr.io/example/certbot:latest
        restart: unless-stopped
        volumes:
          - /opt/example/letsencrypt:/etc/letsencrypt/:rw
        secrets:
          - cloudflare.ini

      service1:
        image: 'ghcr.io/example/service1:latest'
        restart: unless-stopped
        environment:
          - SOME_PASSWORD=${PASSWORD}

    # Secrets for JWT Handling
    secrets:
      cloudflare.ini:
        file: ${SECRET_ROOT}/cloudflare.ini

When you want to use this in your own project, don't forget to edit your domain names.
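
The compose file also expects a credentials file at `${SECRET_ROOT}/cloudflare.ini`, which ends up in the certbot container as `/run/secrets/cloudflare.ini`. A minimal sketch of how one could create it follows. The `dns_cloudflare_api_token` key is what the certbot Cloudflare plugin reads, while the function name `write_cloudflare_ini`, the `CLOUDFLARE_API_TOKEN` variable and the `./secrets` default are assumptions made for this example.

```shell
#!/bin/sh
# Sketch: create the Cloudflare credentials file that docker-compose.yml
# mounts as the "cloudflare.ini" secret.
# Assumptions: SECRET_ROOT defaults to ./secrets and the API token is passed
# in via CLOUDFLARE_API_TOKEN (both are choices made for this example).
write_cloudflare_ini() {
  secret_root="${SECRET_ROOT:-./secrets}"
  token="${CLOUDFLARE_API_TOKEN:?set CLOUDFLARE_API_TOKEN first}"
  mkdir -p "$secret_root"
  (
    # Restrict permissions in a subshell so the caller's umask is untouched
    umask 077
    printf 'dns_cloudflare_api_token = %s\n' "$token" > "$secret_root/cloudflare.ini"
  )
  # Also tighten permissions if the file existed before
  chmod 600 "$secret_root/cloudflare.ini"
}

# Possible use before the first "docker compose up":
# SECRET_ROOT=/opt/example/secrets CLOUDFLARE_API_TOKEN=... write_cloudflare_ini
```

Keeping the file readable only by its owner is worth the extra line, since certbot tends to complain about credentials files with lax permissions.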

As usual: YMMV

Posted by Stephan H Wissel on 15 November 2023 | categories: Docker nginx WebDevelopment