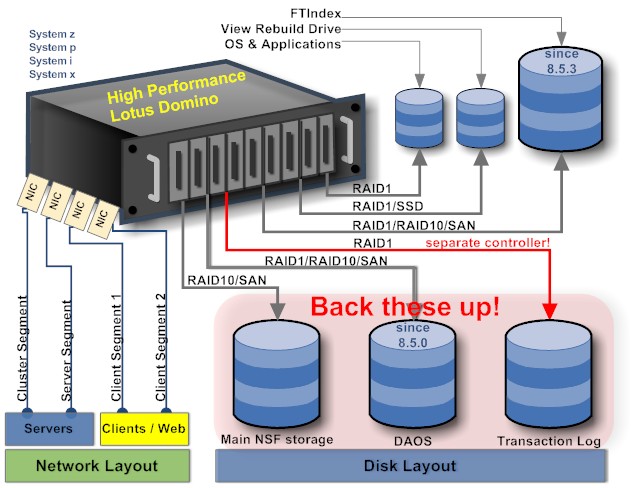

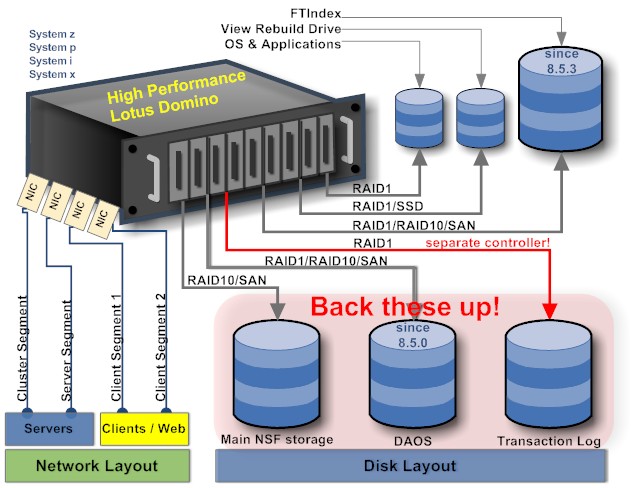

Building a high performance Domino Server

Domino can handle huge user populations. To do this successfully, all elements of a Domino server have to be considered carefully. Following the old insight "It is always the cable", you need to pay attention to the hardware layout. While you can perfectly well install a Domino server on a low-end laptop or a VM image, that won't give you the peak performance you are looking for. You would rather want something that looks like this:

Update: There is now additional material available on how to tune an IBM System x server to peak performance.

Update 2: Samir points to a nice comparison between RAID5 and RAID10. It's not Domino related but insightful. One key point there: watch your controller.

Update 3: Added the separate drive for the full-text index

Let us look at the details:

- Disk layout

- Operating system and applications: This is your first RAID1 array. Since the data here hardly changes and there isn't much of it, a small but fast-spinning drive will do. RAID1 protects you against the failure of one drive and speeds up read operations. Some suggest separate drives for applications and OS, but that might be overkill. You could consider separate partitions instead (easy on Linux/Unix).

- View Rebuild Directory: There is a nice notes.ini variable, View_Rebuild_Dir. You can point it to a separate drive to store the temporary files created during index updates. The default is the system temp directory. This directory is a good candidate for a RAM disk or a solid state disk when your system is updating a lot of views all the time.

- Domino Data: Typically you have RAID5/RAID10 storage here to accommodate the large amount of data (users demand Google-size mailboxes and your applications don't shrink magically). More and more we see SAN systems used for Domino storage, which is OK. Just keep in mind: don't store Domino cluster databases from different clusters in the same SAN, since that defeats the idea of a share-nothing cluster. While we support the use of NAS, network latency and bandwidth are limiting factors. Archival servers run fine with NAS, but not your high performance primary production server.

Update: Fixed the graphic to show RAID10 since it shows much better performance than RAID5.

- Transaction Logging: You have tried it. Switched it on, expected great things, and it didn't perform. The flaw: for good transaction logging performance you need your own disk. Not just another partition, but your very own spindle (RAID1), ideally with its own controller. It would be interesting to see how solid state disks work here.

- Full Text Index: Since Domino 8.5.3 you can move the FTIndex to a different drive. This improves data throughput and reduces fragmentation on your data drive. Add FTBasePath=d:\full_text to the notes.ini and run updall -f. Your 100-user server won't notice. Large environments will benefit.
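To make the point about separate drives a bit more tangible, here is a minimal Python sketch that reads notes.ini-style text and warns when View_Rebuild_Dir or FTBasePath sit on the same drive letter as the data directory. It is an illustration, not a Domino tool: the helper names (`parse_notes_ini`, `drive_of`, `shared_drive_warnings`) and the sample paths are made up, it assumes Windows-style drive letters, and it assumes the data directory is recorded under the `Directory` key of your notes.ini.

```python
from pathlib import PureWindowsPath


def parse_notes_ini(text):
    """Collect key=value lines; keys are lower-cased for easy lookup."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blanks, section headers like [Notes], and lines without '='.
        if not line or line.startswith("[") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip().lower()] = value.strip()
    return settings


def drive_of(path):
    """Return the upper-cased drive part of a Windows path, e.g. 'E:'."""
    return PureWindowsPath(path).drive.upper()


def shared_drive_warnings(settings, keys=("view_rebuild_dir", "ftbasepath")):
    """Warn for each key that is unset or shares a drive with the data directory."""
    # Assumption: the data directory is stored under the 'Directory' key.
    data_drive = drive_of(settings.get("directory", ""))
    warnings = []
    for key in keys:
        value = settings.get(key)
        if value is None:
            warnings.append(f"{key} is not set")
        elif drive_of(value) == data_drive:
            warnings.append(f"{key} shares drive {data_drive} with the data directory")
    return warnings


# Hypothetical sample: the full-text index still lives on the data drive.
sample = """[Notes]
Directory=e:\\domino\\data
View_Rebuild_Dir=r:\\rebuild
FTBasePath=e:\\full_text
"""

found = shared_drive_warnings(parse_notes_ini(sample))
for message in found:
    print(message)
```

A matching drive letter is only a rough hint. Two drive letters can still be partitions on the same spindle, which is exactly the trap described for transaction logging, so the real check remains a look at the physical layout.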

- Network layout

- Cluster Replication (only if you cluster your servers): You want to have your cluster on its own network segment. If you have 2 boxes next to each other, a cross-over cable would do (afaik Gigabit Ethernet requires a hub). If you go three-way (highly recommended), then a hub and an IP address segment that doesn't get routed will do.

- Server Network: All servers should be connected on the server backbone. Put them into their own subnet that clients can't see, so replication never gets disrupted by clients jamming the network ports. The server network also handles mail routing.

- Client access: If you have huge numbers of clients, you might reach the physical limits of your network card or the TCP/IP stack. Use more than one card and/or more than one IP address to have sufficient ports available for clients to connect.
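As a small sanity check for the cluster segment described above, the following Python sketch verifies that every cluster port address falls inside one dedicated segment and that the segment is taken from a private address range. The function name `check_cluster_segment` and all addresses are made up for illustration. Note the assumption: a private range is only a proxy for "doesn't get routed", since whether a segment is actually routed depends on your router configuration.

```python
import ipaddress


def check_cluster_segment(addresses, segment):
    """Return a list of problems; an empty list means the layout looks sane."""
    network = ipaddress.ip_network(segment)
    problems = []
    # Assumption: a private range stands in for "a segment that doesn't get routed".
    if not network.is_private:
        problems.append(f"{segment} is not a private address range")
    for address in addresses:
        if ipaddress.ip_address(address) not in network:
            problems.append(f"{address} is outside the cluster segment {segment}")
    return problems


# Hypothetical three-way cluster on its own segment.
cluster_ports = ["192.168.77.11", "192.168.77.12", "192.168.77.13"]
for problem in check_cluster_segment(cluster_ports, "192.168.77.0/24"):
    print(problem)
```

With the sample addresses nothing is printed. Replace one of them with an address from the server or client network and the function reports it.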

Posted by Stephan H Wissel on 21 April 2009 | categories: IBM Notes, Lotus Notes, Show-N-Tell Thursday